Previous chapter: Mediators -


Next chapter: The Future

"On the Information Superhighway of the Future, What Feature Would You Most Like To See?" "Clean Restrooms" [Shoe, comic strip by Jeff MacNelly, 15Dec1993].


Security is a major issue as we open up the information highways to travelers of all types, and from all countries. Without an adequate level of security, travelers will be wary of using the highways. Without some guarantee of security, businesses will not commit their valuable wares to transport over the highways. Losing goods in transportation is serious and easily noticed. Security on the digital highways must also deal with the copying of information, a threat that in the traditional world was limited to plagiarizing books and using patent information without a license.

Secure information systems have three obligations:

Privacy protection remains a major concern. Public demands have instigated a variety of laws, and again the technical tools that are available do not match the legal requirements for access and protection of privacy [Rotenberg:93]. The punishment for theft and other transgressions has traditionally been the levying of fines or the loss of freedom, namely going to jail. What are suitable punishments in the information world? Already today traffic fines can be paid by credit card, and * e-money will do as well. In either case, the punishing authority gets its funds instantly, while the convict, if poor, gets hit with additional finance charges. These issues are addressed in the Chapter on Electronic Commerce.

Loss of freedom could simply mean loss of passwords that give access to desirable information. Instead of jail, some convicts could be given the equivalent of * house arrest, with a requirement to connect every so often to the warden's or the parole officer's computer. Schemes for validating the convict's actual presence at the designated on-line site have to be developed, since the traditional password is portable. Today a virtual presence is easy to establish, by calling one's home computer from a remote spot and then connecting through it to other sites. Current schemes of passwords and encryption have focused on identifying people for whom keeping a password protected is in their own economic interest, as in using * ATMs.

We describe an early experiment with site-based identification in Sect. Kiosk. To what extent * digital jails can replace physical ones cannot be guessed today; but any relief of the patently unsatisfactory method of punishing people by housing and feeding them in public facilities is worthy of investigation.

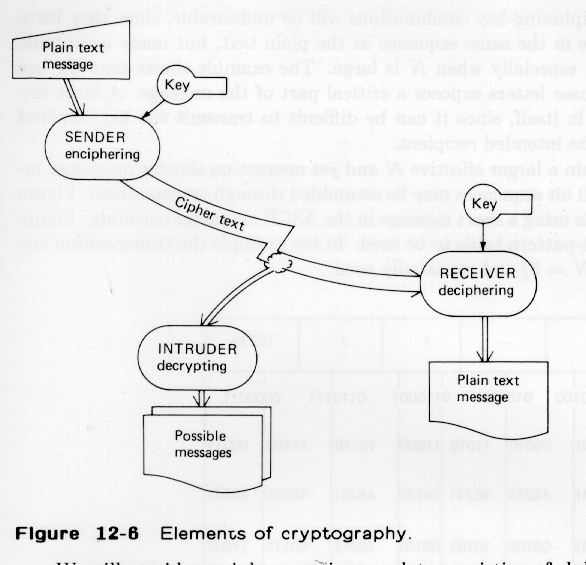

The * Caesar Cipher, illustrated in Fig. Caesar, shows the elements associated with transmitting and breaking ciphered messages. There is an enciphering device, which takes * plain text and combines it with a key to yield cipher text. There is a similar decoding device, which takes cipher text and combines it with the same key to recover plain text. And then there is the crook, who tries to decrypt the cipher text by analyzing it and making likely guesses. The crook succeeds when a guess yields something that makes sense. The substitution cipher shown is trivial, and literate barbarians should be able to decrypt such messages.

Transmitter: plain text "BEWARE_THE_IDES_OF_MARCH", enciphered with key "4" using a 27-symbol alphabet (A to Z plus _), gives cipher text "FI_EVIDXLIDMHIWDSJDQEVGL".

----------------

Crook: decrypting without a key. Guess: blanks are frequent; the most frequent characters in the cipher text are "D" and "I" (four times each); try D = _, deduce that the key is 4, validate: "aha".

----------------

Receiver, or successful crook: deciphering with key "4" yields plain text "BEWARE_THE_IDES_OF_MARCH".
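The three roles in the example can be sketched in a few lines of Python. This is a minimal illustration, assuming the 27-symbol alphabet of the example with "_" standing for the blank; the function names and the crook's "makes sense" test (looking for a few very common English words) are hypothetical stand-ins for human judgment.

```python
from collections import Counter
from typing import Optional, Tuple

# 27-symbol alphabet: A..Z plus "_" standing for the blank.
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ_"
N = len(ALPHABET)


def shift(text: str, key: int) -> str:
    """Replace each symbol by the one `key` places further on, wrapping around."""
    return "".join(ALPHABET[(ALPHABET.index(ch) + key) % N] for ch in text)


def encipher(plain: str, key: int) -> str:
    return shift(plain, key)


def decipher(cipher: str, key: int) -> str:
    return shift(cipher, -key)


# Hypothetical validation: does the guess contain a very common English word?
COMMON_WORDS = {"THE", "OF", "AND", "TO", "A", "IN", "IS"}


def makes_sense(text: str) -> bool:
    return any(word in COMMON_WORDS for word in text.split("_"))


def crook(cipher: str) -> Optional[Tuple[int, str]]:
    """Assume a frequent cipher symbol stands for '_', derive the key, and validate."""
    for symbol, _count in Counter(cipher).most_common():
        key = (ALPHABET.index(symbol) - ALPHABET.index("_")) % N
        guess = decipher(cipher, key)
        if makes_sense(guess):
            return key, guess  # "aha"
    return None


print(crook(encipher("BEWARE_THE_IDES_OF_MARCH", 4)))  # (4, 'BEWARE_THE_IDES_OF_MARCH')
```

Because "D" and "I" are equally frequent, the crook may first try I = _, which yields gibberish and is rejected; the next guess, D = _, succeeds. Trying candidates in frequency order and validating each one is the essence of the guessing attack.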

Ciphering became more sophisticated over time. Using a sequence of, say, 10 numbers as a key gave each of ten successive plain text characters its own substitution key. The key length itself becomes another variable to be guessed by the decryptor. Such a key can be represented as text itself, based on a citation, say Proverbs 17 of the Bible. Using a larger alphabet for the cipher text permitted characters that are frequent in plain text to be substituted by any one of several cipher text characters.
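A periodic key of this kind, what is commonly called a Vigenère-style cipher, can be sketched as a shift that cycles through a list of numbers. This assumes the same 27-symbol alphabet as the Caesar example; the function names are hypothetical.

```python
from typing import List

# Same 27-symbol alphabet as the Caesar example: A..Z plus "_" for the blank.
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ_"
N = len(ALPHABET)


def periodic_shift(text: str, key: List[int], sign: int) -> str:
    """Shift the i-th symbol by key[i mod len(key)]; sign=+1 enciphers, -1 deciphers."""
    return "".join(
        ALPHABET[(ALPHABET.index(ch) + sign * key[i % len(key)]) % N]
        for i, ch in enumerate(text)
    )


def encipher(plain: str, key: List[int]) -> str:
    return periodic_shift(plain, key, +1)


def decipher(cipher: str, key: List[int]) -> str:
    return periodic_shift(cipher, key, -1)


# The same plain letter now maps to different cipher letters:
print(encipher("EEE", [1, 2, 3]))  # FGH
```

Since a frequent plain letter such as E is spread over several cipher letters, simple frequency counting no longer points directly at it; the decryptor must first guess the key length.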

The invention of the telegraph provided electric encoding of characters and enabled automation of ciphering. By the start of the Second World War ciphering machines were in use that had interchangeable rotors, and the keys were reduced to instructions on which rotors to use. Germany used the Enigma machine to encode its messages, and lengthened its code word in 1938, but Polish scientists determined how it was wired and delivered a copy to Great Britain. The longer code word motivated the development of automation in decrypting, and as such the development of computers. In general, machines and methods used in ciphering are difficult to keep secret, since they are used at many sites. The devices may be captured, or their methods determined when some plain text with corresponding cipher text is obtained. But without the key the search for reasonable plain text will take an excessive amount of time, as discussed in the Section on Decryption. In most military situations decryption is only useful if it is rapid.
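The "excessive time" can be estimated: an exhaustive search must try, on average, half of all possible keys. A minimal sketch, where the search rate is an assumed parameter rather than a figure from the text:

```python
from typing import Tuple


def search_time_seconds(key_bits: int, keys_per_second: float) -> Tuple[float, float]:
    """Return (expected, worst-case) seconds to find a key by trying every candidate.

    On average the right key turns up after half of the key space has been tried.
    """
    keyspace = 2 ** key_bits
    worst = keyspace / keys_per_second
    return worst / 2, worst


# Every additional key bit doubles the work of an exhaustive search.
for bits in (40, 56, 64):
    expected, worst = search_time_seconds(bits, 1e6)  # assumed: a million keys per second
    print(f"{bits}-bit key: about {expected / 86400:.3g} days on average")
```

The doubling per bit is why modest increases in key length outrun large increases in decrypting power.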

Managing keys securely is a major effort, and the loss of a key causes all protection to be lost. For that reason keys used in critical military operations are changed regularly. Most active military operations change keys on a daily basis. Printed * signal operations instructions, or *code books, are produced by intelligence agencies and distributed to the military units, and every day a new key is set.

A major task for early computing machines was the decryption, by British intelligence, of messages sent to German U-boats. The automation proved very effective, and analysts working with machines such as the * Enigma were able to provide the British Navy with plain text within a day [Turing ref]. In the Pacific the U.S. Navy was able to intercept some Japanese code books, which illustrates a major weakness of ciphering: the keys have to be transmitted securely [Kahn:49]. Once a key is known, all successive messages, including those designating a new key, can be rapidly decrypted.

The distribution and manual entry of keys is error prone and created problems during Desert Storm in 1991. Recently the U.S. military has moved to the use of an electronic key generator, the Revised Battlefield Electronics Operation Instruction System (RBECS). Modern radios and computers can receive the keys by direct connection [Burgess:94].

Only a small fraction of computer data is protected by ciphering. The awkwardness of managing keys and the delays imposed by decoding are strong disincentives. Most data is protected by requiring passwords to gain access.

The computer systems used at most sites are far from secure. The designer of a personal computer focuses on ease of use and high performance, since any damage done will only affect the owner. Even larger systems are envisaged for use by collaborating groups. Mainframe computers, used by disparate owners, will have more protection, but in the end it is the role of management to allocate resources and assure cooperation. To access another person's data files means having the right access permissions.

The *operating system, like the telephone and electrical control rooms of modern buildings, provides the major opportunity for mischief. Current operating systems have unlimited privileges to the users' files, and so do the people who maintain the operating system, the * superusers. Once a user can enter the operating system, or mimic a superuser, there is no limit to the local destruction that can be perpetrated.

The major locking mechanism in use today is the password. Once intruders obtain a user's name and password for some part of a system, it is often easy for them to break into other areas or the control system. Since computer programs can replicate themselves, like * viruses, they can leave a copy in each room entered. That program in turn can continue to attempt to break into other rooms and other structures. As the virus programs replicate they can unleash a tremendous amount of computing power and bring large segments of networks to their knees.

A well-known incident was perpetrated in 1988 by Robert Morris of Cornell University, who had created a virus that replicated itself among many sites. The virus program gained entry by trying various sets of words likely to be used as passwords: first names, permutations of the user name, and words in an English dictionary. That notion is similar to trying all likely combinations in a number-lock for your bicycle, but the virus program has two advantages: patience and the ability to work well-nigh unobserved. Only by noticing that computers were much busier than they should be could one detect that the virus was at work. That virus did no intentional damage to the contents of the structures, but used up so much of the computing resources that it disabled many sites. In order to kill the virus, computer sites had to quarantine themselves from the network and be scrubbed clean, greatly disrupting the work of many legitimate users.
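The same guessing strategy is why administrators audit their own password files. A minimal sketch of such an audit follows, assuming salted SHA-256 hashes as a stand-in for the Unix crypt function of the time; the function names and the specific candidate rules are hypothetical illustrations of the three categories the text lists.

```python
import hashlib
from typing import Iterable, Iterator, Optional


def hash_password(password: str, salt: bytes) -> str:
    # A single fast hash: cheap to compute, hence cheap for a guesser to test.
    return hashlib.sha256(salt + password.encode()).hexdigest()


def likely_guesses(user: str, first_names: Iterable[str], words: Iterable[str]) -> Iterator[str]:
    """Candidates in the text's categories: user-name permutations, first names, dictionary words."""
    yield user
    yield user[::-1]          # reversed
    yield user.capitalize()
    yield user + user         # doubled
    yield from first_names
    yield from words


def audit(stored_hash: str, salt: bytes, guesses: Iterable[str]) -> Optional[str]:
    """Return the first guess whose hash matches, or None if the password resisted."""
    for guess in guesses:
        if hash_password(guess, salt) == stored_hash:
            return guess
    return None
```

A password found by `audit` is one that a program with patience would also find, which is the point the text makes about the virus's advantage over a human.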

Partially in response to the break-in by Morris, ARPA established a task force at Carnegie Mellon University, the Computer Emergency Response Team (CERT). This task force collects information on break-ins and attempted break-ins, monitors ongoing violations, and attempts to identify the perpetrators. Legal actions are often difficult, since the perpetrator may live in a country where breaking into a computer network is not seen as a criminal act. CERT reports a steadily increasing number of break-ins as the Internet grows in popularity: 1990: 100; 1991: 410; 1992: 720; and 1993: 1300. This rate of growth is actually less than the growth in the number of users, but we cannot tell if that is due to more honesty, better protection methods, or more naïveté among the users.
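The year-over-year growth implied by the CERT counts can be computed directly. The user counts needed for the comparison in the text are not given here, so this sketch covers only the break-in side; the helper name is hypothetical.

```python
from typing import Dict

# CERT break-in counts as quoted in the text.
reports = {1990: 100, 1991: 410, 1992: 720, 1993: 1300}


def yearly_growth(counts: Dict[int, int]) -> Dict[int, float]:
    """Ratio of each year's count to the previous year's."""
    years = sorted(counts)
    return {year: counts[year] / counts[prev] for prev, year in zip(years, years[1:])}


growth = yearly_growth(reports)
for year, factor in growth.items():
    print(year, round(factor, 2))  # 1991 4.1, then 1992 1.76, then 1993 1.81
```

After the first jump the yearly factor settles below 2, so dividing by the corresponding user growth factor would give the per-user trend the text refers to.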

A resource for information about break-ins is Neumann95; Peter Neumann also edits a monthly column, "Inside Risks" (http://www.csl.sri.com/insiderisks.html), in the Communications of the ACM. Unfortunately, it is more difficult to provide prescriptions for preventing break-ins than to describe them [denning].

In February 1994 a virus was discovered that collected passwords by intercepting messages on the Internet. As described in Chap.\I\F, to gain access to a remote computer the user's name and password are transmitted. By recognizing remote login and file transfer (ftp) requests, the virus could collect passwords, presumably for use in later break-ins [Washington Post, 4Feb1994].

!ref Health Data in the Information age == buy , Michel Billelo>

Figure\securebenefits The tradeoff between security and benefits [Ashok Chandra, lecture at Stanford 14Feb1995]

The mandatory levels are well known:

The technology to support security is based on two concepts: identifying who has access rights and what is to be accessed, and creating a well-controlled barrier between the two. The identification must be reliable, else any other security measure is compromised. The barrier must be well controlled, so that only identified and approved accessors and objects can pass through (see the fence discussion below). Since the barrier requires careful maintenance, * mediation technology is useful. A security mediator would be owned and maintained by a security officer, and carry out its actions as an agent of the officer (see below). If the security mediator accesses information at different levels of classification, it must be capable of managing * multi-level security. However, the disparate information sources it draws upon may stay at a single level, which simplifies their management.
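The rules implied by mandatory levels can be sketched as comparisons on an ordered set of levels, in the style of the Bell-LaPadula model. The level names are the usual U.S. classifications and, like the helper names, are assumptions here; a downward flow is routed to a security mediator rather than allowed directly.

```python
from enum import IntEnum


class Level(IntEnum):
    # Assumed ordering of the usual U.S. classification levels.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def may_read(clearance: Level, classification: Level) -> bool:
    """'No read up': read only objects at or below one's clearance."""
    return clearance >= classification


def may_flow(source: Level, target: Level) -> bool:
    """Flows toward the same or higher levels are safe; downward flows need declassification."""
    return target >= source


def route(source: Level, target: Level) -> str:
    return "direct" if may_flow(source, target) else "via security mediator"
```

Only the node that handles the "via security mediator" case has to manage more than one level; every other node can stay at a single level.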

We will deal first with the technical problems of identification, !!......

A variant of *{public key encryption} can provide *authentication, assurance that a sender is indeed the legitimate source of a message. The sender also has a private key (DKS) and can derive from it a public key (PKS). Rather than just sending (PKR{m}), the sender sends (PKR{DKS{m}}). The recipient applies (DKR) to obtain (DKS{m}), which is then decoded by applying the sender's public key (PKS) to obtain m. If the message had been prepared with any key other than the genuine (DKS), applying (PKS) would result in garbage, informing the recipient that the message is not authentic.
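The order of operations in PKR{DKS{m}} can be traced with textbook RSA and toy primes. This sketch is insecure by design (tiny numbers, no padding, no hashing of the message) and only shows which key is applied when; real signature systems sign a hash of the message with padding. The helper names are hypothetical.

```python
from typing import Tuple

Key = Tuple[int, int]  # (exponent, modulus)


def make_keys(p: int, q: int, e: int) -> Tuple[Key, Key]:
    """Return (public, private) textbook-RSA keys built from primes p and q."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e; requires Python 3.8+
    return (e, n), (d, n)


def transform(key: Key, x: int) -> int:
    exponent, modulus = key
    return pow(x, exponent, modulus)


# Toy primes; the sender's modulus (2773) is smaller than the recipient's (3233),
# so the signed value always fits under the recipient's modulus.
PKS, DKS = make_keys(47, 59, 17)   # sender
PKR, DKR = make_keys(61, 53, 17)   # recipient

m = 65
sent = transform(PKR, transform(DKS, m))   # PKR{DKS{m}}
signed = transform(DKR, sent)              # recipient strips the outer layer: DKS{m}
recovered = transform(PKS, signed)         # sender's public key restores m
print(recovered)  # 65
```

Only the holder of DKR can remove the outer layer, and only a value produced with DKS turns back into m under PKS, so secrecy and authentication come from the two layers respectively.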

Software for authentication forms the basis for digital signatures, used in *Signers, in *PGP, *Telescript, and *Kerberos.

!health card number>

An important choice in devising a PIN is the decision whether to give it semantics. An example of an identifier with semantics is the Dewey Decimal Code used for books. The initial numbers and letters provide a classification; the ones at the end are sequentially assigned. But classifications are not unique: is this book on communication, computing, or road transport? What do we do when classifications are refined and should be split?

Where classifications become obsolete, such as horse-drawn carriage building, much coding space may remain unused. Semantic PINs also compromise *privacy; the former French PIN let anyone know one's birthplace, birthdate, and birth gender.

If a neutral PIN, without semantics, is used, a person can be permanently associated with it. The same principle holds for general identifiers. For literary work we have encountered a !!xx>. Any object in general can be permanently associated with a suitable identifier. Retrieval, when coming from the outside, will rarely use identifiers, but rather attributes associated with them, such as names, categories, locations, and the like, !as encountered in Chapter\X1,\X2,\P\GEO...>.

A common way to *break into computers has been to try lists of words externally, using a computer to try likely passwords. To reduce the chance of this type of break-in a number of counter-methods are in use:

Passwords can also be generated, or copied from transmissions by a sniffer or a Trojan Horse (easy on an Ethernet, where one can listen to all transmissions) [ATM caper].
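Two widely used counter-methods against guessing can be sketched with Python's standard library: store only a salted, deliberately slow hash (PBKDF2 via `hashlib.pbkdf2_hmac`), and lock an account after a few failed attempts. The iteration count, lockout threshold, and record layout are assumptions for illustration, not recommendations from the text.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor: makes every single guess slow
MAX_FAILURES = 3      # assumed lockout threshold


def enroll(password: str) -> dict:
    """Store a random salt and a slow hash, never the password itself."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return {"salt": salt, "digest": digest, "failures": 0}


def check(record: dict, attempt: str) -> bool:
    """Verify an attempt; refuse all attempts once too many have failed."""
    if record["failures"] >= MAX_FAILURES:
        return False  # locked: an administrator has to reset the account
    digest = hashlib.pbkdf2_hmac("sha256", attempt.encode(), record["salt"], ITERATIONS)
    if hmac.compare_digest(digest, record["digest"]):
        record["failures"] = 0
        return True
    record["failures"] += 1
    return False
```

The slow hash hurts an attacker who has stolen the password file; the lockout hurts one who guesses through the login interface. Neither helps against a password sniffed in transit.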

Watermarking modifies a document by overlaying a barely perceptible identifying image over the base image. When watermarked paper is used, the identifying image is best seen by holding the document up to the light. Copying only the text from a document loses the watermark. Digital watermarking changes low-order intensity-encoding bits in the image so that the identification is barely visible. Alterations in sections of the document will also destroy the watermarks. Hidden digital watermarking, or steganography, is a technique that hides the identifying image. Here the pattern of bits in a document or image is changed in a manner that is practically invisible. In a high-resolution picture the low-order bits may be changed, causing an imperceptible change of color. Other techniques slightly distort the document, moving some pixels by one position, or changing the spacing of characters and lines in a document. [[more See IEEE Computer Feb1998, Xerox]] These techniques keep the document freely accessible. Another level of protection is encryption, where the document is made inaccessible to all but authenticated and authorized users. In an encrypted document checksums can be used for authentication, and since only authorized people have access, it can be assumed that nobody has altered the document and recomputed the checksum.
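Hiding an identifier in low-order bits can be sketched on a list of 8-bit grayscale intensities. This is a minimal illustration under that assumption, with hypothetical function names; practical schemes spread the mark redundantly so that it survives some processing, whereas this one is lost by any alteration of the marked pixels, as the text notes.

```python
from typing import List


def embed(pixels: List[int], mark: bytes) -> List[int]:
    """Hide `mark` in the lowest-order bit of successive 8-bit pixel intensities."""
    bits = [(byte >> (7 - i)) & 1 for byte in mark for i in range(8)]
    if len(bits) > len(pixels):
        raise ValueError("image too small to carry the mark")
    marked = list(pixels)
    for k, bit in enumerate(bits):
        marked[k] = (marked[k] & ~1) | bit  # each intensity changes by at most 1
    return marked


def extract(pixels: List[int], length: int) -> bytes:
    """Read back `length` bytes from the low-order bits."""
    bits = [p & 1 for p in pixels[: length * 8]]
    return bytes(
        int("".join(str(b) for b in bits[i : i + 8]), 2) for i in range(0, len(bits), 8)
    )
```

A change of one intensity step out of 256 is the "imperceptible change of color" described above.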

A solution applicable to many situations is public key encryption. A potential recipient broadcasts a key (PKR) to candidate senders, to be used in encoding any messages to the recipient. The (PKR) key, however, is not a key suitable for decoding, so it does not have to be protected. The recipient keeps the decoding key (DKR). The recipient creates the (PKR) key by a transformation of the (DKR) key, but the transformation function is as difficult to invert as other decryptions. The holder of a (PKR) key can hence send an encoded message m, but cannot decode a message (PKR{m}) that has been transformed.

The dominant mathematical foundation for public key transforms is the factoring of large numbers. Given, say, a private key (DKR = {3,7}), the public key is (PKR = {21}). The encryption method is constructed so that 3 and 7 have to be applied sequentially to decode a message encoded with 21. While it is easy to guess 3 and 7 from 21, guessing the source factors becomes extremely difficult when they are many and large.
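The asymmetry can be felt with the most naive attack, trial division. The loop below may run up to the square root of n times, so every two extra decimal digits in n multiply its worst-case work by about ten. In RSA the public modulus is the product of two secret primes (3233 = 53 * 61 is a common textbook example); faster factoring algorithms exist, but none known makes factoring numbers hundreds of digits long practical. The function name is hypothetical.

```python
from typing import List


def factor(n: int) -> List[int]:
    """Prime factors of n by trial division, smallest first."""
    factors = []
    d = 2
    while d * d <= n:          # a composite n has a factor no larger than sqrt(n)
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:                  # what remains is itself prime
        factors.append(n)
    return factors


print(factor(21))    # [3, 7]
print(factor(3233))  # [53, 61]
```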

A patent for public key encryption is held commercially by RSA Data Security, but the software is available from several sources. An attempt to ban export of the software to non-U.S. companies seems to have failed, illustrating how difficult it is to keep intellectual property on local highways.

The need to trap criminal activities is real, and the desire to have access to criminal communications that pass along the information highways is sincere. But a government attempt to enforce the use of a breakable encryption method, such as the Clipper chip with its trapdoor, is swimming against the stream [Seabrook:94]. Since encryption can be performed by software as well as by hardware, the trapdoor feature can always be overridden: a message can be encrypted before it is handed to the trapdoor device, so that after entering the trapdoor one would only find another encrypted message, not accessible through any trapdoor. At the same time, government agencies or suppliers that are forced to use only the Clipper chip are exposed to a massive risk: if the trapdoor can ever be opened by intruders, all encrypted information can become public. A malevolent enemy would in fact not divulge that they have a key to that trapdoor.

Trusting that the trapdoor keys can truly be kept secret over the long term is obviously fallacious. If the tortuous, limited-bandwidth path provided by paper messages delivered via a chief of an intelligence agency can be bought for less than $2 million [ref Ames scandal], it is obvious that freeway access to all encrypted data in the U.S. can be purchased through somebody for a small fraction of the value of such an illicit off-ramp.

Many potential sellers exist for alternate encryption technology, since such expertise was developed in all the world's intelligence agencies. Encoding valuable information does provide the best protection against compromise and legally sanctioned access. There is no economic inducement for legitimate or illegitimate businesses to use the Clipper chip. We will have to live in a world where the privacy protection afforded by cryptography will be equally available to all, no matter what their objectives are, and law-enforcement agencies will not be able to monitor illegitimate traffic on the information highway.

If the boundary of an area is formally defined and defended, we speak of a firewall. Within the firewall are a number of computing nodes, connected by some network. Access to the network from the outside is limited to one or a few nodes, and these nodes check whether the accessor is authentic and authorized. The degree of checking varies greatly, and firewalls must be carefully maintained and updated to deal with new types of threats as they develop. Firewalls can also protect against annoyances, such as unwanted email (spamming), or, in reverse, prevent insiders from accessing undesirable places on the net, such as game or pornographic sites. Areas that require a high degree of protection may dedicate one or more computers to the protection function, to avoid the risk that changes made by customers for reasons other than security break the firewall.
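One common way for the firewall nodes to check traffic is packet filtering. A minimal sketch under stated assumptions: rules match on destination host and port only, the first matching rule wins, and anything not explicitly allowed is denied. The rule format and host names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    action: str              # "allow" or "deny"
    dst_host: str            # "*" matches any host
    dst_port: Optional[int]  # None matches any port


def decide(rules: List[Rule], dst_host: str, dst_port: int) -> str:
    """Return the action of the first matching rule; deny when nothing matches."""
    for rule in rules:
        host_ok = rule.dst_host in ("*", dst_host)
        port_ok = rule.dst_port is None or rule.dst_port == dst_port
        if host_ok and port_ok:
            return rule.action
    return "deny"  # default deny: only explicitly allowed traffic passes


# Hypothetical policy: mail may reach the gateway, remote logins are refused everywhere.
rules = [Rule("allow", "gateway.example.com", 25), Rule("deny", "*", 23)]
```

Default deny is what keeps access "limited to one or a few nodes": a newly added internal machine is unreachable until someone deliberately writes a rule for it.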

Methods used by the firewall service include:

On the storage side of the firewall, data can be retrieved and freely shared. On the user side of the firewall, only * authenticated users can have access, according to the authority they have been granted [Dadaism ref]. Programs that operate across the firewall must be written and validated with care; less effort is needed for other modules if we can guarantee that they stay on their side of the firewall.

!! make consistent firewall, barrier, fence, all defining a protection domain, versus end-to-end security. Note that routers need not encrypt.

Figure Borde (=fence) in Dadaism system.

Figure Layer model with implied permissions. Top secret on the inside. maybe in the shape of a round mountain.

Any information flow to lower layers requires *declassification. Declassification requires having knowledge about the content, and authority assigned to a responsible person. Such authorization is typically obtained manually. Automation to allow release of aggregated data whose privacy should be protected has been proposed and is feasible, but risky scenarios can be created.

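Example: the salary of the company president can be obtained by statistical inference, for instance by comparing the total of all salaries with the total of all salaries except the president's.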
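The inference can be made concrete with invented figures: two averages, each over several people and therefore harmless-looking on their own, reveal one individual's salary once they are combined. Names, figures, and the helper function are hypothetical.

```python
# Hypothetical payroll; the figures are invented for illustration.
salaries = {"president": 400_000, "alice": 90_000, "bob": 70_000, "carol": 80_000}


def average(names):
    """An aggregate query that never shows any single record."""
    return sum(salaries[name] for name in names) / len(names)


everyone = list(salaries)
all_but_president = [name for name in everyone if name != "president"]

# Turn each average back into a total, then subtract.
inferred = average(everyone) * len(everyone) - average(all_but_president) * len(all_but_president)
print(inferred)  # 400000.0
```

Neither query by itself would alarm a reviewer, which is why automated declassification has to look at sequences of requests, not single ones.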

Even if inference problems are not a concern, it is hard to attain high confidence in the security of a software system. Formal, mathematically based technology is being developed, and automatic verification of the secure behavior of modules in a clean architecture may soon be accomplished. In the meantime, verification is performed by expert visual analysis of the module code. The verification of a system composed of many modules brings new challenges. The complexity and adaptability of substantial software-based systems make comprehensive verification, if attempted, suspect.

[WAVE chip technology ATP]

Figure Flow through network, with security assignment and flows, related to layered model.

If flow in the insecure direction is needed, then a specialized node, a *security mediator, is installed in the network. It is best if the security mediator resides in a private node, a computer not used for any other tasks. Only that node needs to support *multi-level security (*MLS). The simplest version of a security mediator only provides services to the *security officer, the person who has the authority to declassify information. A request for information from a source at a higher classification level is submitted to the security mediator. The security officer can display, edit, and if appropriate modify the request, and then release it for processing by causing it to enter the layer of the source and be transmitted to that source. The information comprising the response is also transmitted to the security mediator, so that the security officer can inspect the result, modify it if it is too revealing, and release the approved result for transmission to the requestor at the more public layer.

A simple security mediator can greatly speed up the tedious paperwork prevalent today, where many of the requests and results are transmitted in paper form and reentered at their destination. The simplicity of the task makes comprehensive validation feasible and trustworthy. The performance of the overall system is minimally impacted. MLS systems, because of the time involved in their verification, tend to be a generation older than the best general processing systems that can be obtained. Now only the security mediator requires MLS capability. Since its processing task is simple, its lower performance will not impact the work carried out in the other nodes.

As the workload submitted to a security mediator increases, the task of the security officer can be aided by automatic application of *release rules provided by the officer. Release rules could identify attributes of the database that are normally publicly releasable, they could constrain the aggregation being requested and actually performed, and they could monitor successive requests that in their totality are suspicious and require falling back on manual release processing. Tools that reduce the security officer's workload free up labor time that can be devoted to queries too complex for automation and to monitoring the overall security of the system's operation.
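The three kinds of release rules just listed can be sketched as a small filter that either releases a result automatically or refers it to the security officer. The class, its parameters, and the overlap test are hypothetical; the overlap test is one simple heuristic against the subtraction attack on aggregates, and more elaborate query sequences can evade it, which is why the manual fall-back remains essential.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, List, Set, Tuple


@dataclass
class ReleaseRules:
    releasable: Set[str]   # attributes normally publicly releasable
    min_group: int         # smallest set of records an aggregate may cover
    history: List[Tuple[str, FrozenSet[str]]] = field(default_factory=list)

    def review(self, attribute: str, group: Iterable[str]) -> str:
        """Return 'release' for automatic release, or 'manual' to refer to the officer."""
        members = frozenset(group)
        if attribute not in self.releasable or len(members) < self.min_group:
            return "manual"
        for earlier_attribute, earlier in self.history:
            # Two aggregates whose groups differ in only a few records
            # would let a requester isolate those records by subtraction.
            if earlier_attribute == attribute and len(earlier ^ members) < self.min_group:
                return "manual"
        self.history.append((attribute, members))
        return "release"
```

With `min_group=3`, an average salary over four staff members is released, but a follow-up average over the same staff minus one person is held for the officer.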

Examples of security rules, assuming operation within a commercial company:

Documents require a different level of protection than money. Once a twenty-dollar bill changes hands, you have lost all rights to that bill, and, if the money must be returned, other bills, coins, or checks can be used. A Digibox (tm), as provided by Intertrust, is an encrypted and authenticated data structure. Such a data structure can be stored anywhere and transported. The creator or subsequent owner of a document can place it in a Digibox and make it available to potential consumers. It can be forwarded to wholesalers and distributors without being opened.

Intertrust software is required to decrypt it, but must be given the keys. A consumer who changes any bits in it will not be able to decrypt it. The Digibox is active, and can report to a clearinghouse when it is legitimately opened, so that billing can occur on a per-use basis. Multiple Digiboxes are needed to deal with alternate payment schemes. To protect payment from subversion, the Digibox can only be opened inside an Intertrust Commercenode (tm). That node drives the printer and display, making electronic cut-and-paste impossible.
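Intertrust's actual Digibox format is not described here. As a hypothetical illustration of why changing any bit makes such a container unusable, a keyed message authentication code (HMAC, from Python's standard library) can bind the content to a tag; a real container would also encrypt the content, which this sketch omits. The function names are assumptions.

```python
import hashlib
import hmac

TAG_SIZE = 32  # bytes in a SHA-256 HMAC tag


def seal(key: bytes, content: bytes) -> bytes:
    """Prefix the content with a keyed tag computed over it."""
    tag = hmac.new(key, content, hashlib.sha256).digest()
    return tag + content


def open_sealed(key: bytes, box: bytes) -> bytes:
    """Return the content only if the tag still matches (any change is detected
    with overwhelming probability)."""
    tag, content = box[:TAG_SIZE], box[TAG_SIZE:]
    expected = hmac.new(key, content, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("container has been altered")
    return content
```

Without the key, a consumer can neither forge a matching tag for modified content nor strip the check, since the opening software refuses to proceed.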

The privacy of the reader can also be protected, since the information is mediated by the clearinghouse. This will put additional requirements on the clearinghouse and it is not yet clear if readers will be willing to pay for privacy, although such anonymity exists today when you read documents in private.

There is information that must stay secure. Isolated systems and paper documents provide a simple level of security available to all. Encryption provides an effective method of protection if transmission over public facilities is required. Cryptography is well understood and can be implemented to provide nearly any level of security. Mismanagement of keys is the greatest risk, including the loss of a key, which will in effect cause loss of the encrypted information. An encryption system that can legally be broken into by government agencies, as discussed in the Section on trapdoors, is not likely to be accepted. Allowing the government to have trapdoors on the information highway violates expectations of privacy, just as cars on a U.S. highway cannot be searched unless there is a violation or an outstanding warrant. Having a system with a trapdoor also presents an intolerable risk, since theft of the trapdoor key exposes all information.

!!from above: Current operating systems have unlimited privileges to the users' files, although that should not be necessary>

| name | full name | technology | services | [ref] |
|---|---|---|---|---|
| RSA | RSA Data Security (bought out 15 April 1996 by Security Dynamics, maker of the one-time card, for $250M) | | | |
| TIS | Trusted Information Systems | SI | | |
| Rainbow Technologies Inc, Irvine CA | | | | |
| Aladdin Knowledge Systems Ltd, Tel Aviv | (bought FAST Software AG, Munich, April 1996, for $36.2M) | | | |
| Security Dynamics | Security Dynamics | password generator cards | authentication | www.securID.com |
| SST | Stanford Secure Technologies | content checking | privacy protection | www.2ST.COM |


CS99I  home page.


-------------

Attack by foreign government

Industrial espionage

Theft

Vandalism and mischief

Pervasive and ubiquitous

Need comprehensive approach

Prevent unauthorized access

Unau.. denial of access

Third-party authentication validation

Security controls for connecting to networks

Flexible enclaves - ditr. Virtual communities. Secure interoperation

Enclaves -- security does not get in the way. Designate systems without/within enclave perimeter

Near-term: toolkits for security administration, integration, emergency response

Experiments, long term: HPCC, mobile, DigLib, ElComm, sensitive (healthcare). Confinement technologies

Reference: SKIP project at ETH Zurich [Germano Carroni] uses two-level key management (by handling certificates). Model has secure islands, subislands and outposts.

Consider differences in client-server and peer-to-peer.

In the client-server model the set of candidate clients is unknown (unless subscription).

In-line encryption is now feasible at little or no loss. With authentication, net bandwidth is reduced.

Note: RSA patent expires in 1997?

Focuses on key management to define the domains.

Which layer? Application -> kernel {session, transport}-> hardware

Recommends high level

IETF IP security working group: architecture (RFC 1825), authentication (RFC 1826, 1828), confidentiality (RFC 1827, ...), key management (draft form only).

Shared secrets cannot easily use RSA, since symmetric.

Videoservers are unidirectional, simpler.

TINA consortium (Bellcore, Fujitsu, many others) to move telecom towards a sharable, single architecture. !growth>.

Domains called schemes of authentication.

Booz-allen wg.10

Simple model: central holder, hierarchical assignment. Lower levels can have their own rules for subsidiary certificate assignment.

Lower levels could violate tight rules set and promised at high levels.

Also needs recovery if a lower-level node dies. Fatal if the top-level node dies.

Distributed trust model: user holds own key and travels through systems; where are the keys then? A long distance in terms of intermediate certificate holders between signer and verifier leads to distrust. Need facilities to monitor, repair, and modify policies and systems, also in case of faults.

Vijay Varadharajan CSD, Un.West.Aust, Sydney.

Enterprise-wide security control.

DCE now only in server. Has privilege management module, with access lists, derived conceptually from Kerberos. DCE 2.0 to have public key from server. Check OSF votes?

UWA developing distinct authentication server. Their research prototype, supported by HP, provides tokens to certify privileges. Can account for aggregate use, such as being limited to $10,000 withdrawal per week. Uses some public key technology. Open: how to propagate policy changes among domains (assumption: identical).

Have a policy language (no inheritance; positive (multiple levels) and (strong) negative authorizations).

Kerberos [MIT handles it all] plans to update with public key capability.

Vesna Hassler, Dept. of Distributed Systems, TU Vienna (advisor Posch). Collaboration security includes: 1. Multi-party secure communication ports. 2. Group-oriented cryptography, as for teleconferencing, not served well by multiple pairwise agreements. 3. Workflow security. 4. Application-specific security.

Whitfield Diffie et al. at ASAFE panel 1Jul1996

Note objectives of encryptor and surveiller:

The latter wants to be able to filter many messages to locate items of concern.

30-bit key decryptable by PC (or 56)

40-bit: export limit

Too low to be secure against WS attack today, done by MIT

Doable at $8,900 (price to FBI $16,000).

Too high for filtering by an intercept device

60-bit (or current 56-bit DES) also doable with gate arrays:

cost you $1,000,000 to break in 7 days.

90-bit: not doable with current technology within a document's valid lifetime

120-bit: secure in the foreseeable future

Good cryptography research outside of the US: 90% of papers are foreign, less hamstringing of foreign products. Viz. Yugoslavs working in Sweden.

Today 65 countries produce 270 cryptographic devices, available to all good guys and crooks.

NSA motto in the 1980s: In God we trust, the rest we monitor.

[per Whitfield Diffie panel, 1996]

From a national security point of view, what are the costs of encryption?

NSA wanted first to be able to break crypto through export control. Led to a crypto arms race. Made things actually worse, and so now they are hiding behind law enforcement agencies.

But law enforcement (as the FBI) only needs to decrypt docs, not do surveillance.